A spotlight on biotech R&D operations and one company’s approach to improving them

One of the things I love most about my job is the opportunity to deep dive into different industries – immersing myself in the pain points, shifting market dynamics, headwinds and tailwinds for tech, and, my favorite part, meeting the founders bringing new solutions to market.

While I get a chance to invest in only a small number of founders each year, I meet dozens more who are doing incredible things to reinvent the industries they operate in. This spotlight will be the first of a regular series where I’ll showcase some of the companies I’ve met along the way, and the work they’re doing to build new solutions for some of the world’s biggest challenges.

Earlier this year, we shared our thoughts on one of the biggest problems, if not the biggest, facing the life science industry – declining R&D productivity – and outlined levers at different stages of the R&D lifecycle where tech could have an impact.

For an industry defined by milestones, it’s only natural to think about innovation applied to each phase like this (e.g., “AI for target validation,” “AI for lead optimization,” “AI for IND filing,” etc.). This is more or less what’s reflected in the market too. There are lots of point solutions, each promising to make its dent on a discrete phase of R&D. And not for nothing – there’s lots of opportunity for improvement there.

But really, when you take a step back, you realize there’s a common denominator for much of the inefficiency in the system. It’s a cultural thing, a way of operating that’s so ingrained in the nature of R&D. It’s still very academic. Unlike other industries where R&D is more process-oriented, in life science R&D, a lot more of the work is exploratory – and it needs to be by design. But over-indexing on protecting that freedom has left the industry devoid of operational rigor. For businesses generating so much data, it’s shocking how little of it is used in R&D operations.

This inefficiency has created an enormous opportunity for tech. If a vendor can drive efficiency gains at the scale of the entire operating system – by reinforcing operational focus and ensuring clear and consistent data, frameworks, and governance around decisions – it can bring life science R&D out of academia and into industry.

One company with that goal in mind is Kaleidoscope Bio.

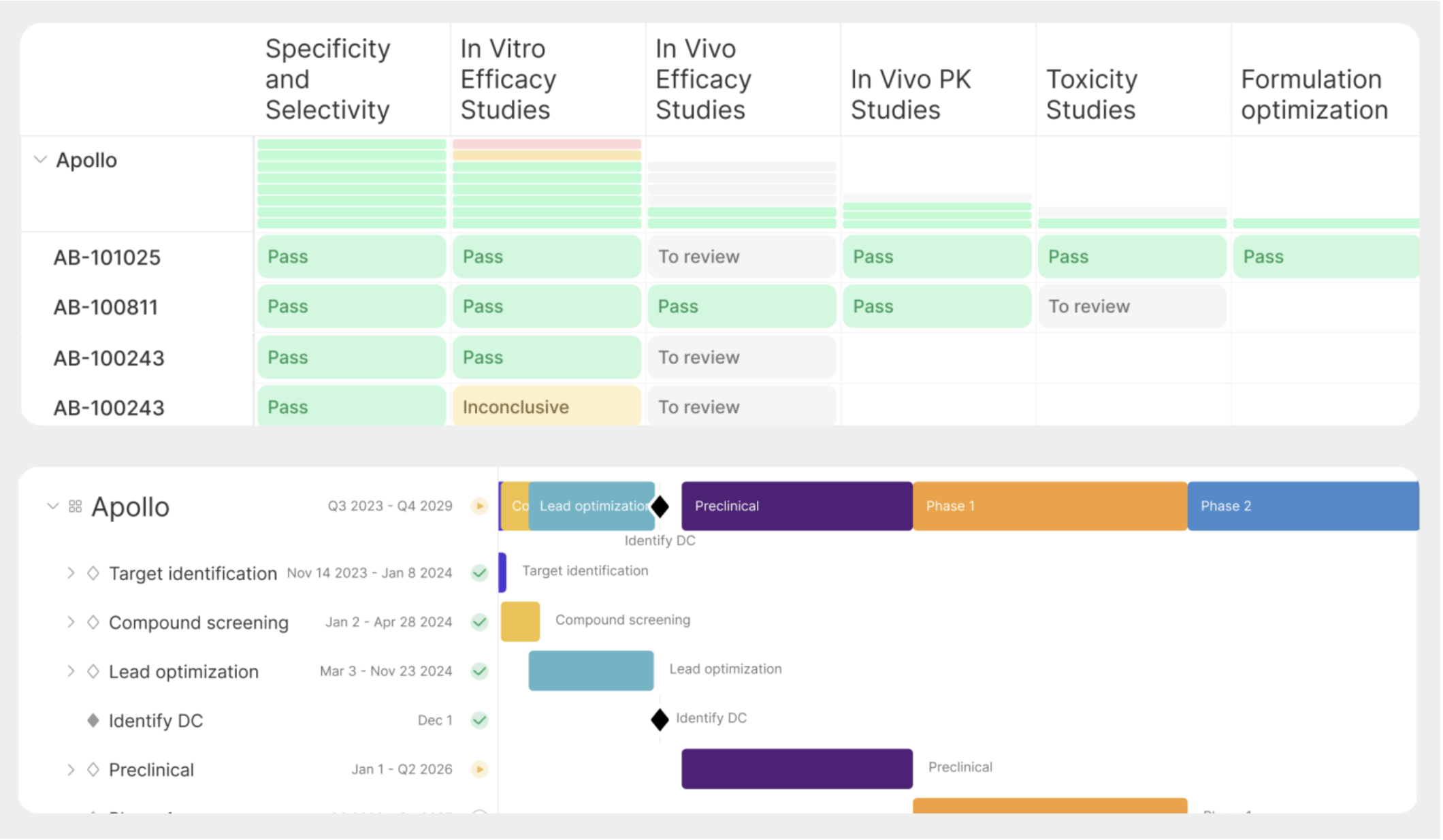

Kaleidoscope is building the first R&D operations platform purpose-built for biotech. While electronic lab notebooks (ELNs) have become the surface where science is recorded, Kaleidoscope will be where all R&D decisions are made and collaborative work is actioned. Its intuitive, integrated data and project management platform will unlock new ways of working and making decisions, thrusting the biotechs using Kaleidoscope into a different stratosphere from their peers. They will be able to answer questions they can’t answer today, identify trends they’re presently overlooking, automate downstream R&D data-intensive processes (e.g., drafting IND submissions or patent filings), leverage AI in new ways, and more. All these benefits will compound into more efficient and more successful R&D programs, which is the holy grail for a growing biotech.

What does this mean in practice? How can software fundamentally change how R&D operations are done and, accordingly, drive gains in R&D productivity? We sat down with Bogdan Knezevic, Co-Founder & CEO of Kaleidoscope.bio, to find out. The following is an output of our conversations over the last year and is meant to be an illustrative example of a pioneer in this emerging category.

The problem

Biotech is an expensive, high-stakes game of chasing inflection points. Companies are burning tons of cash quickly and outcomes are binary: either they get asset(s) to market, or they shut down. In this context, driving productivity and efficiency is not a nice-to-have; it’s table stakes.

Reproducing scientific findings and rapidly building on each other’s work are foundational to the field. Effective R&D involves constantly making critical decisions based on thousands of expensive experiments over many years. And decisions to run clinical trials, costing hundreds of millions of dollars, critically hinge on the connectivity, traceability, and accuracy of preclinical scientific results.

Unfortunately, that data is inconsistently formatted and stored across disparate systems (largely based on scientists’ preferences) and change management is a wholly ineffective strategy to force standardization. It’s nearly impossible to get a clear picture of who is doing what, where data sits, why experiments are or aren’t done, and how all the scientific projects are interconnected. Even answering a straightforward question like “how did we get this figure/result?” is extremely difficult.

Without an R&D-native tool to aggregate, standardize, compare, and track R&D data efficiently, organizations typically rely on PowerPoints, emails, meetings, and individual memory to drive decision-making. This is neither robust nor scalable.

What that means in practice is that whenever someone – whether it’s a program manager, Head of Research, Chief Science Officer, a strategy/corporate dev person, CFO, or CEO – asks “what is the status of our R&D programs?”, an R&D or business operations person must go around to each scientist, ask them to take time away from the bench to aggregate all of the experimental data they’ve generated recently (which can be very difficult to search for), and then try to stitch it together manually in a PowerPoint slide or Excel file. This process consumes 10+ hours each week (20-25% of their time) for that R&D/business ops person and 2-3 months per year for the organization, on top of taking precious experimental time (which is far more expensive time) away from scientists.
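As a quick sanity check on those time figures, here is a minimal sketch of the arithmetic. It assumes a 40-50 hour working week (implied by "10+ hours" being 20-25% of someone's time) and 52 working weeks per year; neither assumption is stated outright above, and the function name is purely illustrative.

```python
# Back-of-the-envelope check of the status-reporting burden quoted above.
# Assumptions (not from the article): a 40-50 hour work week, 52 weeks/year.

HOURS_PER_WEEK_ON_STATUS = 10   # "10+ hours each week" from the text
WEEKS_PER_YEAR = 52             # assumption: no allowance for holidays
WEEKS_PER_MONTH = 52 / 12       # roughly 4.33 weeks in a month


def status_reporting_load(work_week_hours: float) -> tuple[float, float]:
    """Return (share of weekly time, working months per year) spent on status roll-ups."""
    share_of_week = HOURS_PER_WEEK_ON_STATUS / work_week_hours
    annual_hours = HOURS_PER_WEEK_ON_STATUS * WEEKS_PER_YEAR
    working_weeks = annual_hours / work_week_hours
    working_months = working_weeks / WEEKS_PER_MONTH
    return share_of_week, working_months


for hours in (40, 50):
    share, months = status_reporting_load(hours)
    print(f"{hours}h week: {share:.0%} of time, about {months:.1f} working months per year")
```

Under these assumptions, ten hours a week works out to between two and three working months per year, which is one reading under which the figures above line up.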

Existing solutions to this problem are not sustainable; stitching together a slew of generic software either doesn't work or breaks at scale, while building custom in-house software is extremely expensive and hard to maintain.

Critically, these approaches leave the R&D organization vulnerable to erroneous data and major gaps in data visibility. That means important trends, correlations, and scientific conclusions get completely overlooked or misinterpreted, leading to poor hand-offs between teams (e.g., from pharmacology to in-vitro screening or between the wet lab and computational biology teams), duplicative experiments, prolonged investment of resources into programs that will inevitably fail, underinvestment in programs showing signs of promise, etc. All of this creates existential threats to a biotech organization: needlessly burning cash, poor decision-making, failure to hit inflection points, and ultimately low chances of success getting to market.

Several meaningful technological and macro trends are converging to make a data-driven R&D operations platform a necessity for biotechs today:

As the industry shifts to more biologically derived, large molecule drugs (vs. chemical small molecule drugs) and more personalized approaches to medicine, biotechs require a fundamentally different R&D process that is more data-intensive, collaborative, and iterative.

R&D is getting more expensive and more challenging, making productivity an acute and growing problem. The complexity of targets, modalities, and diseases is going up. Being razor-sharp about which assets and programs you’re prioritizing and deploying capital to is table stakes. For biotechs, operational efficiency can be the difference between life and death.

The size, complexity, and volume of data are growing incredibly fast. This compounds all the traditional challenges in R&D and underscores the importance of using software that lets you leverage and treat data properly.

The rate of data exchange is compounding, both internally (heterogeneous teams) and externally (outsourcing, partnership-driven business models, hand-offs between SME and large enterprise). How organizations track, label, organize, and share data has never been more important.

Computation is playing an increasingly critical role, both through the growing number of ML-driven companies and through opportunities for more ‘traditional’ biotechs to leverage computational advances. New ways of working necessitate tools that make this more native and seamless.

The solution

With Kaleidoscope, teams can aggregate, track, and summarize the key R&D data that powers their most critical decisions and collaborate more effectively across teams (both internally and externally), slashing operating costs, speeding time to market, and driving more revenue earlier.

For example, if Kaleidoscope can drive 20% efficiency gains, this is both ~$400M in savings per company per asset on market (reduction in R&D spend) and ~$1B in extra revenue per company per asset on market (faster path to market = more patent exclusivity = more years of exclusive sales).
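To make that arithmetic concrete, here is a minimal sketch of one set of inputs that would reproduce those figures. Only the 20% gain comes from the text; the R&D cost per asset, the development timeline, and the annual sales number are hypothetical placeholders chosen for illustration, not figures from Kaleidoscope.

```python
# Illustrative only: one hypothetical set of inputs that reproduces the
# ~$400M savings and ~$1B extra revenue quoted above.

EFFICIENCY_GAIN = 0.20                 # from the text

# Hypothetical inputs (assumptions, not stated in the article):
ASSUMED_RD_COST_PER_ASSET = 2.0e9      # USD spent to bring one asset to market
ASSUMED_TIMELINE_YEARS = 10            # years from program start to launch
ASSUMED_ANNUAL_EXCLUSIVE_SALES = 0.5e9 # USD per year while under patent

# Savings: the same work done with 20% less spend.
rd_savings = EFFICIENCY_GAIN * ASSUMED_RD_COST_PER_ASSET

# Revenue: patent terms run from filing, so launching earlier leaves
# more years of exclusive sales before expiry.
years_gained = EFFICIENCY_GAIN * ASSUMED_TIMELINE_YEARS
extra_revenue = years_gained * ASSUMED_ANNUAL_EXCLUSIVE_SALES

print(f"R&D savings per asset:   ${rd_savings / 1e6:,.0f}M")
print(f"Extra exclusive revenue: ${extra_revenue / 1e9:,.1f}B")
```

Different inputs would give different totals; the point is simply that savings scale with R&D spend, while the revenue upside scales with how many years of exclusivity a faster path to market recovers.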

How does it work?

The heart of the Kaleidoscope platform is its data engine. Kaleidoscope has integrations with all the dominant industry tools (e.g., electronic lab notebooks like Benchling or CDD, Excel, databases, etc.) and pulls all the disparate, siloed, irregularly formatted R&D data into a central place, standardizing it using an industry-specific data ontology, defined for the first time by Kaleidoscope. To do this, they’ve developed reusable libraries for parsing different data formats and created abstraction layers that normalize varied data sources. Importantly, this is more than a relational database; it contains a flexible graph-like structure for compound relationships that can handle complex scientific relationships while remaining intuitive. It has intelligent reference/lookup systems that provide relational-like capabilities while hiding complexity from users. So, they’ve done the hard work to make this all seem simple for end users.

Scientists can continue to work and record data where and how they always have, without any behavior change required. And for the first time, R&D leaders and company executives can get near real-time insight – and net new insights previously impossible – from the data without having to manually compile and transform it first. There are a few vectors of value in just this:

Time saved on data aggregation & comprehension → more time for science

Organized, enriched data → better, timelier OKR tracking, decision making, and IP filing

Transparent, secure data sharing with research collaborators → better communication, collaboration → more efficient R&D

Tool consolidation: eliminating the need to navigate multiple platforms like Google Drive, CDD Vault, ELNs, Excel, and PowerPoint daily.
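For readers who want a feel for what the data engine described above involves, here is a minimal sketch of the general pattern: per-source parsers feeding a shared schema, plus a small graph of compound relationships. This is not Kaleidoscope's implementation. Every name here (AssayRecord, the parser functions, CompoundGraph, the field names and source formats) is hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class AssayRecord:
    """Hypothetical common schema that every source is normalized into."""
    compound_id: str
    assay: str
    value: float
    unit: str
    source: str


def parse_eln_entry(entry: dict) -> AssayRecord:
    # Hypothetical ELN export: nested keys, potency reported in nM.
    return AssayRecord(
        compound_id=entry["compound"]["id"].strip().upper(),
        assay=entry["assay_name"].lower(),
        value=float(entry["result"]["ic50_nM"]),
        unit="nM",
        source="eln",
    )


def parse_spreadsheet_row(row: dict) -> AssayRecord:
    # Hypothetical spreadsheet: flat columns, potency reported in uM.
    return AssayRecord(
        compound_id=row["Compound ID"].strip().upper(),
        assay=row["Assay"].lower(),
        value=float(row["IC50 (uM)"]) * 1000.0,  # convert uM to nM
        unit="nM",
        source="spreadsheet",
    )


# Abstraction layer: callers name a source and never touch its raw format.
PARSERS: Dict[str, Callable[[dict], AssayRecord]] = {
    "eln": parse_eln_entry,
    "spreadsheet": parse_spreadsheet_row,
}


def normalize(source: str, raw: dict) -> AssayRecord:
    return PARSERS[source](raw)


class CompoundGraph:
    """Tiny directed graph of labeled relationships between compounds."""

    def __init__(self) -> None:
        self._edges: Dict[str, List[Tuple[str, str]]] = defaultdict(list)

    def relate(self, parent: str, relation: str, child: str) -> None:
        self._edges[parent].append((relation, child))

    def descendants(self, root: str) -> List[str]:
        # Depth-first walk collecting every compound reachable from root.
        seen: List[str] = []
        stack = [root]
        while stack:
            node = stack.pop()
            for _, child in self._edges.get(node, []):
                if child not in seen:
                    seen.append(child)
                    stack.append(child)
        return seen


records = [
    normalize("eln", {"compound": {"id": " cmp-001 "},
                      "assay_name": "Kinase Inhibition",
                      "result": {"ic50_nM": "125"}}),
    normalize("spreadsheet", {"Compound ID": "cmp-002",
                              "Assay": "Kinase Inhibition",
                              "IC50 (uM)": "0.5"}),
]

graph = CompoundGraph()
graph.relate("CMP-001", "analog", "CMP-002")
graph.relate("CMP-002", "salt_form", "CMP-003")
```

Once both records share the same identifiers, assay names, and units, they can be compared directly, and the graph lets a lookup such as graph.descendants("CMP-001") follow a chain of related compounds without the user needing to know how the links are stored.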

The platform also serves as a focusing mechanism for teams: a way to tie a biotech’s critical goals directly to the data it needs to generate against them. A system like this makes it far easier for scientific leaders to focus the work being done and help the team understand what, precisely, they need to de-risk and why (and how their experiments are contributing to this). This can help move the organization from a more lax, academic way of operating to a more rigorous, data-driven one.

But what becomes exponentially more interesting is what Kaleidoscope can build since it’s enmeshing critical, standardized R&D data with important operational context (otherwise hidden institutional knowledge/behavior) for the first time. As just a few examples:

Prioritization of R&D programs: Automatically identifying patterns in R&D data to recommend prioritization of one program over another based on likelihood of success, alignment with strategic goals, or return on investment.

Trend identification and predictive analytics: Leveraging historical experimental data to detect trends and correlations that human analysts might miss, such as subtle indicators of efficacy or safety concerns.

Optimization of experimental designs: Providing AI-driven recommendations to refine experimental protocols, reducing redundancy and optimizing resource allocation.

Reducing resource wastage: Identifying experiments that are unlikely to yield significant insights early, preventing wasted effort and capital.

Accelerating time to market: Facilitating faster iteration cycles by using AI to simulate outcomes or predict bottlenecks in the R&D process.

Automating regulatory documentation: Drafting complex regulatory submissions (e.g., IND filings) based on AI-driven aggregation of experimental and operational data, significantly saving time.

Benchmarking and continuous improvement: Learning from industry-wide R&D data (where permitted) to benchmark performance and recommend best practices.

Ultimately, Kaleidoscope can become the engine driving unstructured to structured data conversion, knowledge organization, work orchestration, IP management, and more. It also becomes the tool of choice for anyone looking to push the bounds of what they can do with their data. As such, the product has tons of potential to scale over time, moving from preclinical into clinical-phase tracking, and beyond dashboards into intelligent BI, IP management/IND drafting, and even AI model development. There is growing demand for all of this and what they’ve built today – which is solving a present universal pain point – is necessary to expand into these other areas in the future.

With scale, Kaleidoscope is in a unique spot to learn what best-in-class R&D operations look like, set that standard, and create a product that brings the rest of the industry up to par. In that vein, Kaleidoscope succeeding means growing the size of the entire market: more biotechs succeeding, more drugs reaching more patients earlier, and faster overall market growth as the industry builds on compounding gains.